August 12, 2025 – As artificial intelligence (AI) tools like ChatGPT become part of our everyday lives, from providing general information to helping with homework, one legal expert is raising a red flag: Are these tools quietly narrowing the way we see the world?

In a new article published in the Indiana Law Journal, Professor Michal Shur-Ofry of the Hebrew University of Jerusalem (HU), a Visiting Faculty Fellow at the NYU Information Law Institute, warns that the tendency of our most advanced AI systems to produce generic, mainstream content could come at a cost.

“If everyone is getting the same kind of mainstream answers from AI, it may limit the variety of voices, narratives, and cultures we’re exposed to,” Prof. Shur-Ofry explains. “Over time, this can narrow our own world of thinkable thoughts.”

The article explores how large language models (LLMs), the AI systems that generate text, tend to respond with the most popular content, even when asked questions that have multiple possible answers. One example in the study involved asking ChatGPT about important figures of the 19th century. The answers, which included figures like Abraham Lincoln, Charles Darwin, and Queen Victoria, were plausible but often predictable, Anglo-centric, and repetitive. Likewise, when asked to name the best television series, the model’s answers centered on a short tail of Anglo-American hits, leaving out the rich world of series that are not in English.

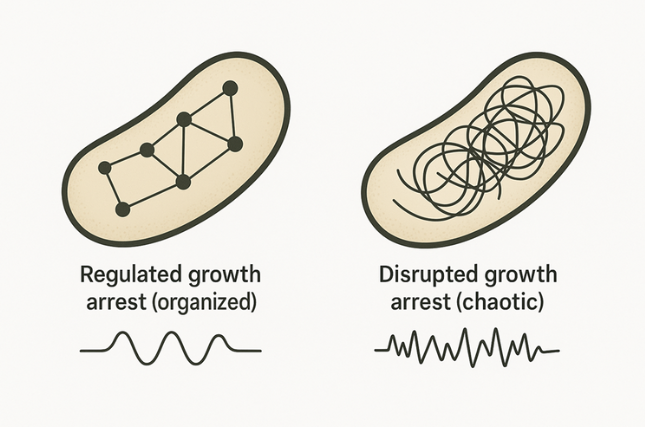

The reason is the way the models are built: they learn from massive digital datasets that are mostly in English, and they rely on statistical frequency to generate their answers. This means that the most common names, narratives, and perspectives surface again and again in the outputs they generate. While this might make AI responses helpful, it also means that less common information, including the cultures of small communities that are not based on the English language, will often be left out. And because the outputs of LLMs become training materials for future generations of LLMs, the “universe” these models project to us will, in time, become increasingly concentrated.
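The feedback loop described above can be illustrated with a toy simulation. This is a minimal sketch and not the method used in the article: the function names, the Zipf-like starting distribution, and all the numbers are assumptions chosen for illustration. Each "generation" samples outputs in proportion to how frequent each item is, then the next generation is "trained" only on those outputs.

```python
import random
from collections import Counter


def zipf_weights(n_items):
    """Hypothetical starting popularity: item at rank r has weight 1/r."""
    return [1.0 / rank for rank in range(1, n_items + 1)]


def simulate_feedback(n_items=200, samples_per_generation=500,
                      generations=10, seed=0):
    """Return how many distinct items remain reachable after each generation.

    Assumption: a "model" is just a frequency table, and its "outputs" are
    random draws weighted by that table. Real LLM training is far richer.
    """
    rng = random.Random(seed)
    items = list(range(n_items))
    weights = zipf_weights(n_items)
    support_sizes = [n_items]  # generation 0: every item is still reachable

    for _ in range(generations):
        # Generate outputs in proportion to current frequencies.
        outputs = rng.choices(items, weights=weights, k=samples_per_generation)
        # The next generation learns only from those outputs.
        counts = Counter(outputs)
        weights = [counts.get(item, 0) for item in items]
        support_sizes.append(sum(1 for w in weights if w > 0))

    return support_sizes


if __name__ == "__main__":
    print(simulate_feedback())
```

In this toy setup, an item that is never sampled in one generation gets a weight of zero and can never reappear, so the number of distinct items can only shrink over time. Rare items are the first to vanish.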

According to Prof. Shur-Ofry, this can have serious consequences. It can reduce cultural diversity, undermine social tolerance, harm democratic discourse, and adversely affect collective memory—the way communities remember their shared past.

So what’s the solution?

Prof. Shur-Ofry proposes a new legal and ethical principle in AI governance: multiplicity. This means AI systems should be designed to expose users to different options, content, and narratives, or at least alert them that such alternatives exist, rather than presenting just one “most popular” answer.

She also stresses the need for AI literacy, so that everyone will have a basic understanding of how LLMs work and why their outputs are likely to lean toward the popular and mainstream. This, she says, will “encourage people to ask follow-up questions, compare answers, and think critically about the information they’re receiving. It will help them see AI not as a single source of truth but as a tool and ‘push back’ to extract information that reflects the richness of human experience.”

The article suggests two practical steps to bring this idea to life:

- Build multiplicity into AI tools: for example, through a feature that lets users easily raise the model’s “temperature,” a parameter that controls how random the model’s word choices are (higher values yield more diverse content), or by clearly notifying users that other possible answers exist.

- Cultivate an ecosystem that supports a variety of AI systems, so users can easily get a “second opinion” by consulting different platforms.
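To make the "temperature" idea in the first step concrete, here is a minimal sketch of the standard temperature-scaled softmax that text generators commonly use to turn scores into probabilities. The candidate labels and score values are invented for illustration and do not come from the article or from any real model.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Convert raw scores into probabilities, scaled by a temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [score / temperature for score in logits]
    largest = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - largest) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def entropy(probs):
    """Shannon entropy in nats: higher means a more even spread."""
    return -sum(p * math.log(p) for p in probs if p > 0)


# Hypothetical scores: three "mainstream" answers and two rarer ones.
candidates = ["mainstream A", "mainstream B", "mainstream C",
              "less common D", "less common E"]
logits = [4.0, 3.5, 3.2, 1.0, 0.5]

if __name__ == "__main__":
    for temperature in (0.5, 1.0, 2.0):
        probs = softmax_with_temperature(logits, temperature)
        summary = ", ".join(f"{name}: {p:.3f}"
                            for name, p in zip(candidates, probs))
        print(f"T={temperature}: {summary} (entropy {entropy(probs):.3f})")
```

Raising the temperature flattens the distribution, so the rarer candidates are sampled more often, while lowering it concentrates probability on the top-scoring answer.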

In a follow-on collaboration with Dr. Yonatan Belinkov and Adir Rahamim from the Technion’s Computer Science department, and HU’s Bar Horowitz-Amsalem, Prof. Shur-Ofry and her collaborators are attempting to implement these ideas and present straightforward ways to increase the output diversity of LLMs.

“If we want AI to serve society, not just efficiency, we have to make room for complexity, nuance and diversity,” she says. “That’s what multiplicity is about, protecting the full spectrum of human experience in an AI-driven world.”

The research paper, titled “Multiplicity as an AI Governance Principle,” is now available in the Indiana Law Journal.

Researchers:

Michal Shur-Ofry

Institutions:

- Faculty of Law, the Hebrew University of Jerusalem

- Visiting Faculty Fellow, NYU Information Law Institute